Neural Rendering Faces

Overview

This past semester I have been researching neural rendering, a field that seeks to generate images using neural networks. Recently, I have needed a lot of different headshots, so I trained a neural network that can generate pictures of a face at any desired angle.

Though some research has been done on this problem, existing methods are either hard to control or laborious. My method introduces a scene rotation step that reduces the problem to an adjacent area of research that is much more active and has seen recent success with papers like ViewFormer [1]. I found that this rotation step allowed me to use the ViewFormer paper's existing neural networks directly, despite their having been trained on rooms rather than faces. Retraining the codebook neural network of ViewFormer on the FEI face dataset [2] greatly improved the results.

The image below shows a sequence of images generated by the retrained neural network as the horizontal rotation angle requested for the face is varied.

Background

The aim of this neural network is to use reference images of a rotating face, taken by a fixed camera, to generate images of that face at a new angle as seen by the same fixed camera.

Approaches for fixed camera and rotating face

There are two traditional methods for this problem of generating novel face photos with a fixed camera:

- Generative Adversarial Networks (GANs) [3], which are hard to control

- 3D modelling [4] [5], which requires a laborious manual 3D modelling step

Scene rotation reduction method

The field of neural rendering has mostly focused on generating images for the old problem rather than the current one:

- old problem: a camera moving around a fixed object

- current problem: a fixed camera and a rotating face

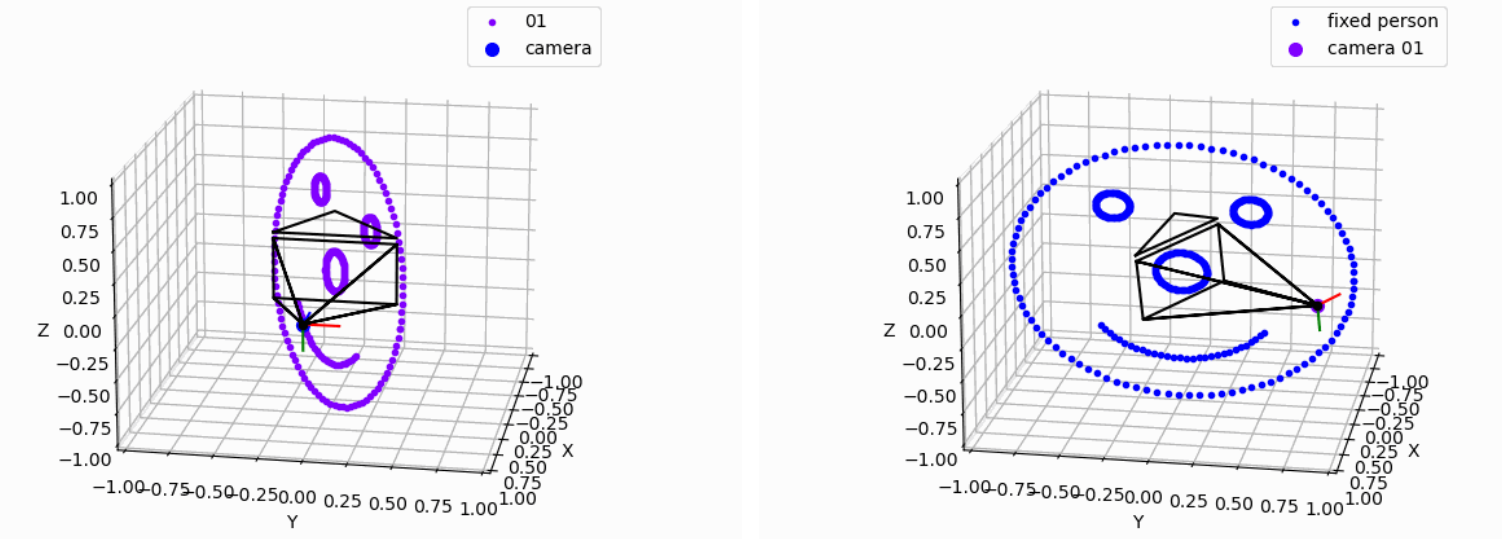

However, because the background is plain and the headshot is cropped to the face, the picture stays the same so long as the relative positioning between the face and the camera is fixed. This is illustrated by the picture below, where both cameras see the face outline identically despite the scene rotating.

Rotating the scene allows the current problem to be reduced to the old solved problem of a moving camera in a static scene! This means I can build on existing solutions to the old problem to solve the current one.

Approaches for moving camera and fixed scene

Though many approaches exist for the reduced problem of generating images of a fixed scene from a moving camera, I will highlight NeRF [6] and ViewFormer [1] here. My work builds on the neural networks of the latter, with the addition of a scene rotation step for the problem reduction.

NeRF

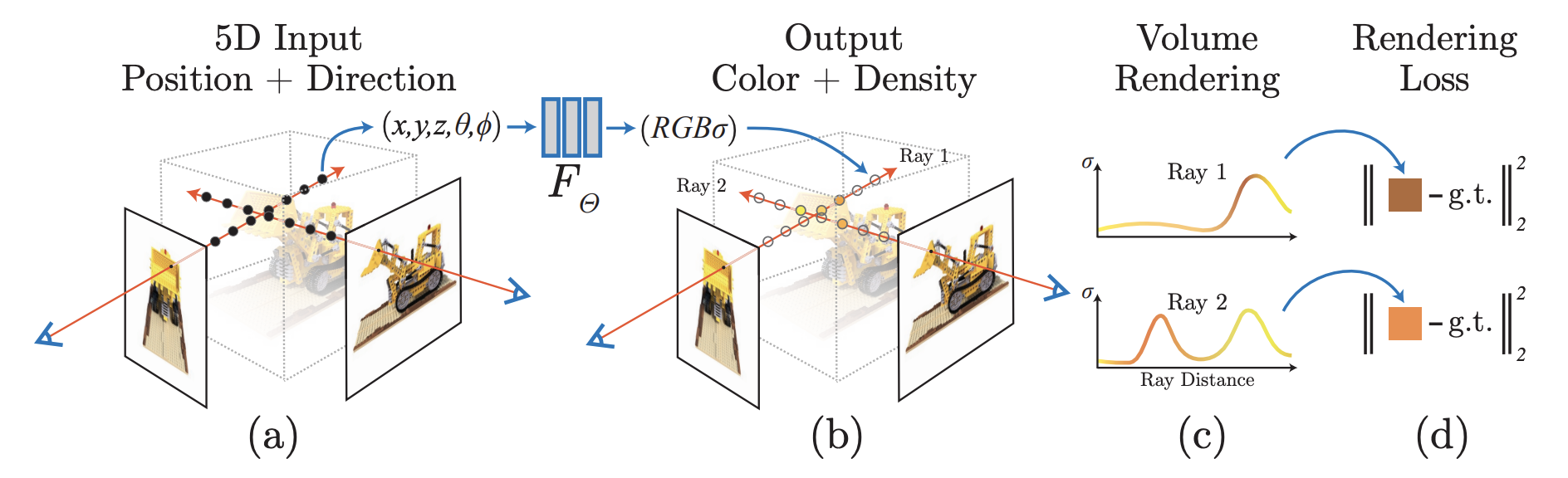

The seminal paper on rendering a static scene from a moving camera is the Neural Radiance Fields (NeRF) paper [6]. The paper proposes:

- The scene is a cubic volume

- To render the pixels of an image, many rays are projected from a camera through the volume

- The color value for a pixel is formed by sampling multiple times along a ray

- A neural network is trained to predict the color and density of any point in the volume, such that the rendered images from different known camera locations match the known truth

This model has been highly successful and has been integrated into products such as Google Maps' fly-through feature. However, it is slow to train because every image needs a large number of rays, and every ray needs many samples.
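To make the ray-sampling step concrete, here is a minimal NumPy sketch of how the samples along one ray can be blended into a pixel colour, following the standard volume-rendering weights used in NeRF-style methods. The function name `render_ray` and the toy inputs are assumptions made for illustration; in a real model the densities and colours would come from the trained network rather than being written by hand.

```python
import numpy as np

def render_ray(densities, colors, deltas):
    """Composite the samples along one ray into a single RGB pixel.

    densities: (N,) non-negative density at each sample point
    colors:    (N, 3) RGB colour at each sample point
    deltas:    (N,) distance covered by each sample along the ray
    """
    # Opacity contributed by each sample segment.
    alpha = 1.0 - np.exp(-densities * deltas)
    # Transmittance: fraction of light that reaches sample i unblocked.
    transmittance = np.cumprod(np.concatenate(([1.0], 1.0 - alpha[:-1])))
    # Each sample's contribution to the final pixel.
    weights = transmittance * alpha
    return weights @ colors

# Toy ray: empty space, then an opaque red surface hiding a green one.
densities = np.array([0.0, 1e3, 1e3])
colors = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
deltas = np.full(3, 0.1)
pixel = render_ray(densities, colors, deltas)
```

In this toy case the pixel comes out red, because the first opaque sample blocks everything behind it. Repeating this for every pixel of every training image is what makes training expensive.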

ViewFormer

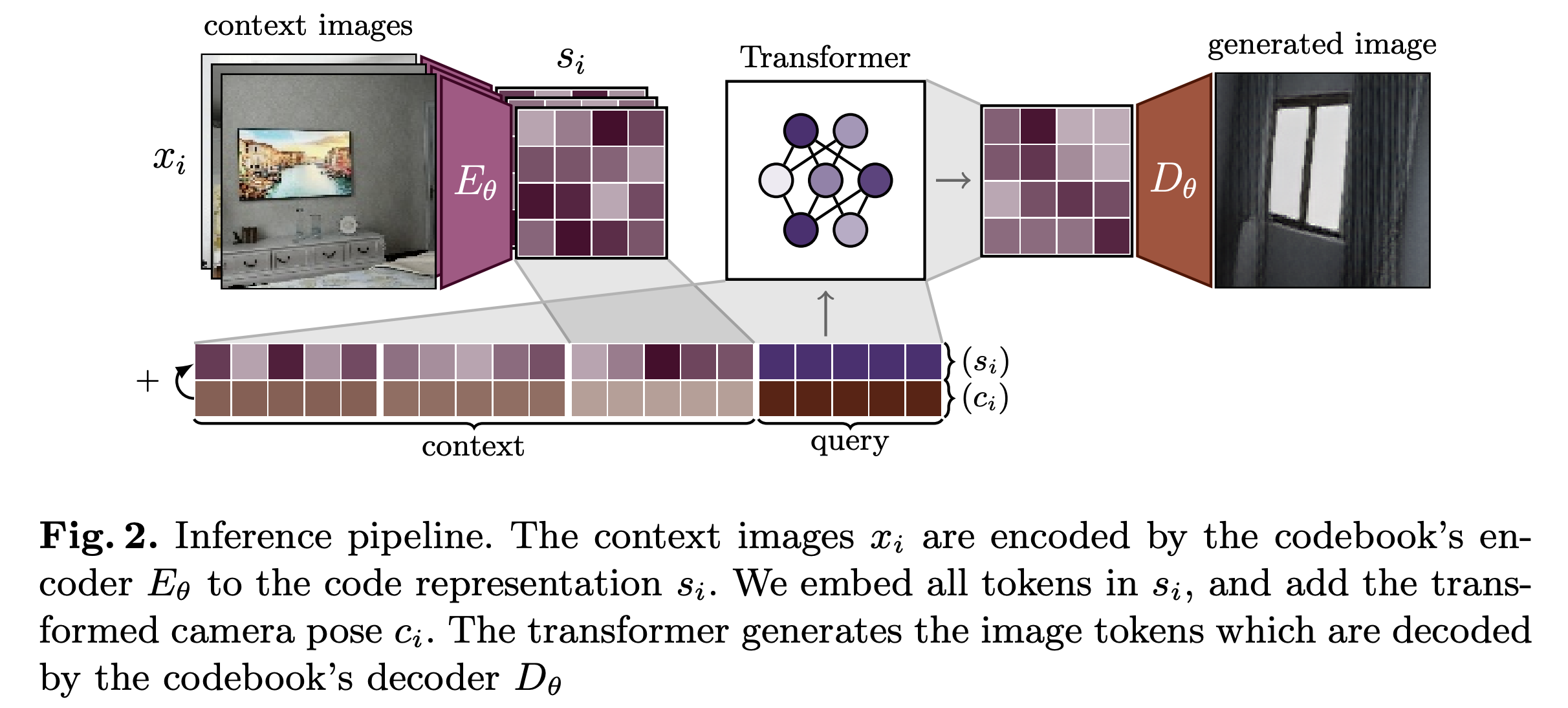

This article extends ViewFormer [1], a more modern and faster approach to the old problem of a moving camera and a static scene. Instead of the ray casting used by traditional rendering engines, it uses:

- a codebook neural network: a Vector Quantized Variational Autoencoder [7] to convert one or more known context images of the object, along with their camera positions, to an embedding space

- a sequence prediction neural network: a transformer to predict an image embedding for a new requested camera position

This architecture speeds up training because the model doesn't have to sample each ray as NeRF does, and because the codebook and the transformer can be trained separately. Due to these speedups, my neural network uses the ViewFormer image generation pipeline shown in the image below, with the addition of the rotation step to reduce my problem to the one ViewFormer solves.
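The core idea of the codebook can be sketched in a few lines: each latent vector produced by the encoder is replaced by the index of its nearest entry in a learned codebook, and decoding looks the entry back up. The snippet below is only an illustration of that quantisation step under simplifying assumptions; the function names and the tiny hand-written codebook are hypothetical, and the encoder and decoder networks of the real VQ-VAE are omitted.

```python
import numpy as np

def quantize(latents, codebook):
    """Map each latent vector to the index of its nearest codebook entry.

    latents:  (N, D) vectors produced by an encoder
    codebook: (K, D) learned code vectors
    """
    # Squared Euclidean distance between every latent and every code: (N, K).
    dists = ((latents[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

def dequantize(indices, codebook):
    """Look the code vectors back up from their indices."""
    return codebook[indices]

# Hand-written toy codebook with three 2D codes.
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]])
latents = np.array([[0.1, -0.2], [3.5, 4.2], [0.9, 1.3]])
tokens = quantize(latents, codebook)
reconstructed = dequantize(tokens, codebook)
```

The discrete tokens are what a sequence model such as a transformer can then predict, one token at a time, for the requested view.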

Technical Implementation

Recall the goal is for the neural network to take in:

- Some context images of a person's face to understand their facial features

- A desired new pose for their face, defined as yaw, pitch and roll. Yaw is looking left-right, pitch is looking up-down and roll is tilting the head about the axis that runs along the nose.

Also recall that ViewFormer takes in the context face images, the camera positions for those context images and a new desired camera position. ViewFormer will then output a predicted image for the new camera position.

Consequently the steps required are:

- Get yaw, pitch and roll of context images

- Convert all yaw, pitch and roll face poses to camera positions

- Retrain ViewFormer on new dataset

Context images

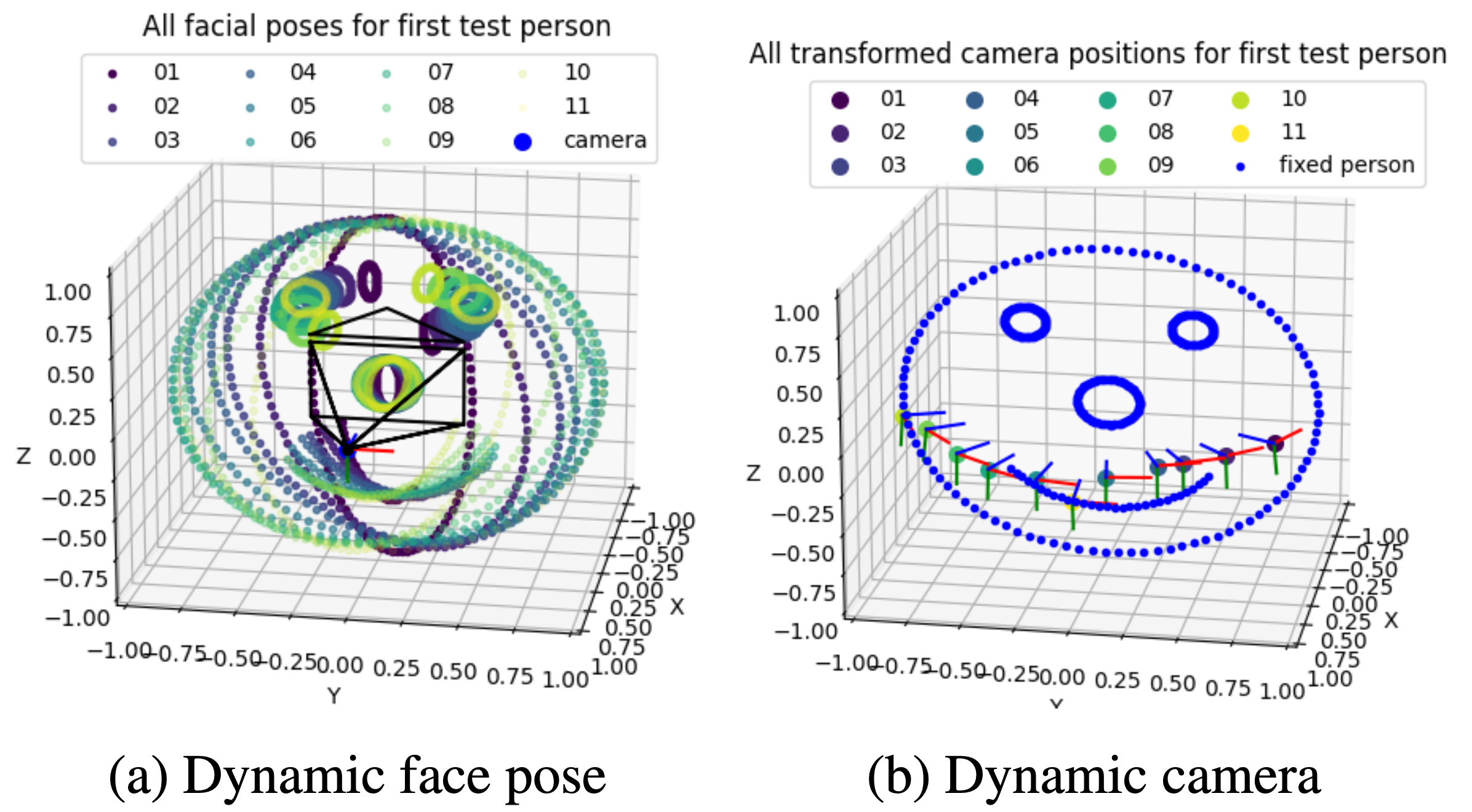

All training and test image sequences come from the FEI dataset [2]. This dataset contains sequences of participants in front of a fixed camera gradually rotating the yaw of their face across 7 images. To label the images with full yaw, pitch and roll angles, I used the AWS Rekognition API. The sequence of images for a participant can be visualised in 2 ways:

- On the left I have plotted the angle extracted for each headshot

- On the right I have rotated the scene (face and camera) for each image, such that the face is fixed across the sequence. This is compatible with ViewFormer as the camera is moving and the face is fixed!
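As a rough sketch of the labelling step, the snippet below reads the pose angles out of a Rekognition `detect_faces` response, which reports a `Pose` with `Yaw`, `Pitch` and `Roll` in degrees for each detected face. The helper names `extract_pose` and `label_image` are my own, and the code assumes AWS credentials are already configured and that each image contains one face of interest; it is not necessarily how the labelling was done for this project.

```python
def extract_pose(response):
    """Return (yaw, pitch, roll) in degrees for the first detected face."""
    faces = response.get("FaceDetails", [])
    if not faces:
        raise ValueError("no face detected")
    pose = faces[0]["Pose"]
    return pose["Yaw"], pose["Pitch"], pose["Roll"]

def label_image(path, client=None):
    """Send one image file to Rekognition and return its (yaw, pitch, roll)."""
    if client is None:
        import boto3  # imported lazily so extract_pose can be used offline
        client = boto3.client("rekognition")
    with open(path, "rb") as f:
        response = client.detect_faces(
            Image={"Bytes": f.read()},
            Attributes=["DEFAULT"],  # the default attribute set includes Pose
        )
    return extract_pose(response)

# Offline example using a hand-written response of the same shape.
sample_response = {"FaceDetails": [{"Pose": {"Yaw": -30.5, "Pitch": 2.0, "Roll": 1.5}}]}
yaw, pitch, roll = extract_pose(sample_response)
```

Keeping the parsing separate from the network call makes it easy to cache raw responses and re-derive the angles later without calling the API again.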

Let's formalise this scene transform math next to reduce my problem (camera fixed) to the ViewFormer problem (camera moving).

Scene transform

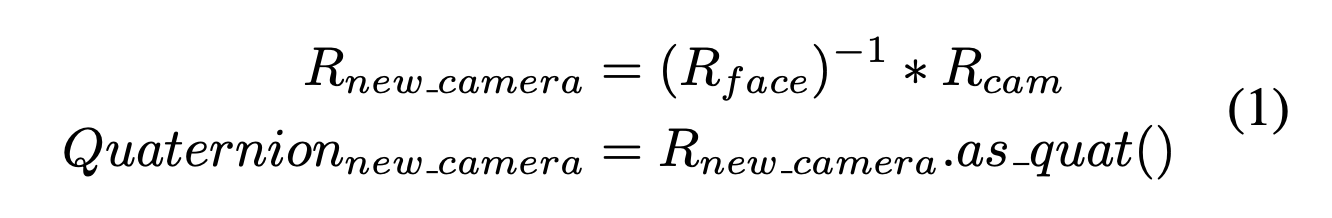

ViewFormer expects a moving camera and fixed face. The context images' pose and desired pose are in a scene with a fixed camera and moving face. I used rotation matrices and quaternions to get the 3D position of the camera and the 4D quaternion of the camera's orientation from the facial yaw, pitch and roll:

- Model the scene as a camera at (1,0,0) pointing at the origin and a face centered at (0,0,0) pointing at the camera if no rotations are applied to it

- R_face is the rotation matrix for the yaw, pitch and roll of the face. R_cam is the rotation matrix that points the camera at the origin

- An identity matrix is no rotation. Hence, to return the face to no rotation you must apply to it the inverse of R_face. As the camera must remain fixed compared to the face, you must also apply the inverse of R_face to it as shown in Equation 1
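The equation itself is not reproduced in this text. One way to write the orientation update described in the bullets above, assuming rotations are 3x3 matrices acting on column vectors and using primes (my notation, not necessarily the original's) for quantities after the scene has been rotated, is:

```latex
\begin{align}
R'_{\text{face}} &= R_{\text{face}}^{-1} R_{\text{face}} = I \\
R'_{\text{cam}}  &= R_{\text{face}}^{-1} R_{\text{cam}}
\end{align}
```

Because R_face is a rotation matrix, its inverse is simply its transpose, so no matrix inversion is needed in practice.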

The position of the camera can also be found by applying the inverse of R_face. Using this math, it is now possible to reduce the fixed camera, moving face problem to ViewFormer's expected format of moving camera, fixed face.
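A minimal NumPy sketch of this transform is shown below. The axis conventions are assumptions made for the example rather than details taken from the original implementation: z points up, yaw is a rotation about z, pitch about y, roll about x (the nose axis), applied in the order yaw, pitch, roll, and the camera's forward direction is its local +x axis. The function names are likewise illustrative.

```python
import numpy as np

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1, 0, 0], [0, c, -s], [0, s, c]])

def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0], [s, c, 0], [0, 0, 1]])

def face_rotation(yaw, pitch, roll):
    """R_face from angles in degrees (assumed axes: yaw=z, pitch=y, roll=x)."""
    y, p, r = np.radians([yaw, pitch, roll])
    return rot_z(y) @ rot_y(p) @ rot_x(r)

# Fixed-camera scene: camera at (1,0,0), turned so its forward axis faces the origin.
CAM_POSITION = np.array([1.0, 0.0, 0.0])
R_CAM = rot_z(np.pi)  # assumed convention: forward is the camera's local +x axis

def face_pose_to_camera(yaw, pitch, roll):
    """Rotate the whole scene by the inverse of R_face so the face ends up unrotated."""
    r_inv = face_rotation(yaw, pitch, roll).T  # inverse of a rotation is its transpose
    return r_inv @ CAM_POSITION, r_inv @ R_CAM

# A face turned 90 degrees in yaw is equivalent to the camera orbiting to the side.
position, orientation = face_pose_to_camera(90.0, 0.0, 0.0)
```

The camera stays at unit distance from the face and keeps pointing at the origin for any pose. If a quaternion is needed, the returned matrix can be converted with a library routine such as SciPy's `Rotation.from_matrix(orientation).as_quat()`, which returns the components in (x, y, z, w) order.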

Putting it together

Thus to train my face image generator neural network I needed to:

- Extract facial pose (yaw, pitch and roll) from input images using AWS Rekognition API

- Transform facial rotations into equivalent camera positions through inverse rotation transform

- Retrain ViewFormer

To generate images using the neural network:

- Convert desired output facial pose (yaw, pitch and roll) into equivalent camera positions through inverse rotation transform

- Use codebook to compress context facial images and their known poses into vectors

- Use the transformer to predict the image vector for the desired facial pose from the compressed context vectors

- Use the codebook to decompress the predicted vector into the full predicted facial image

Results

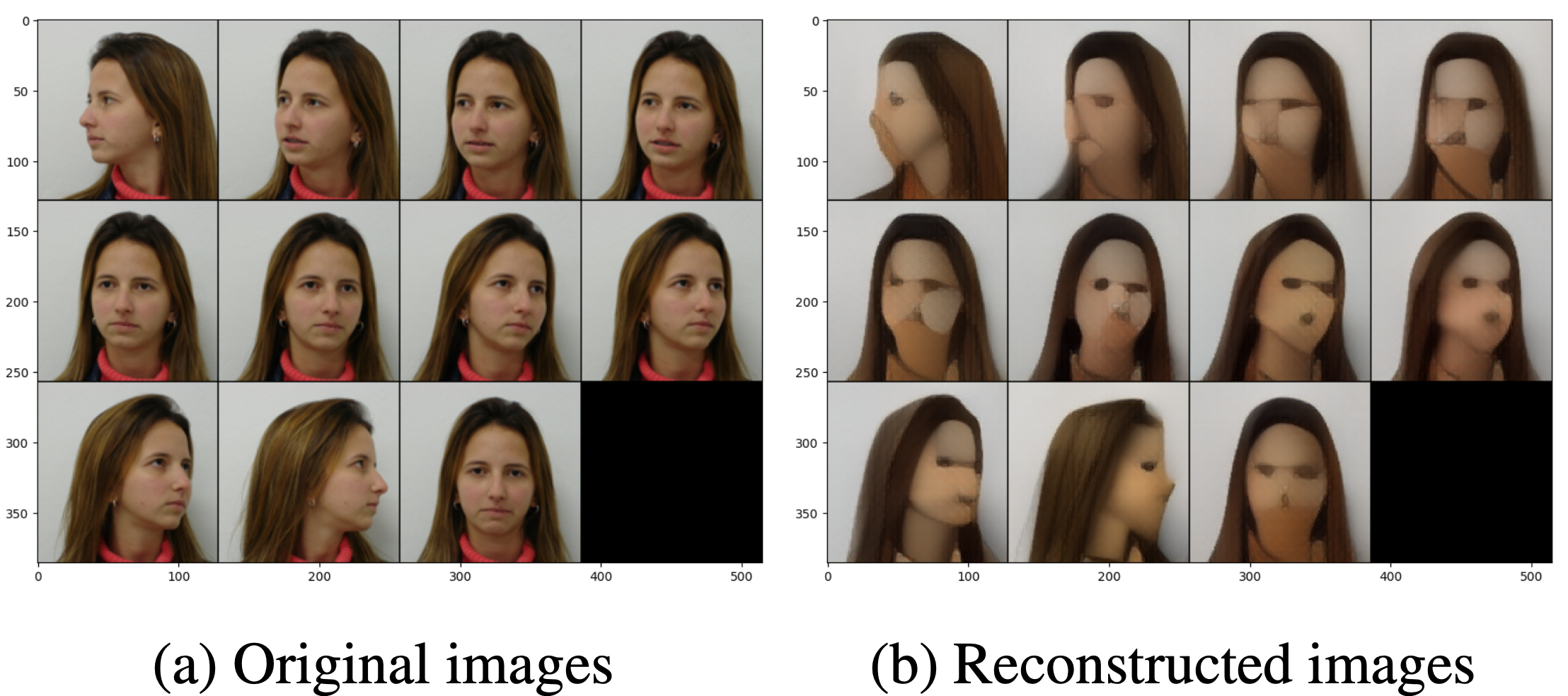

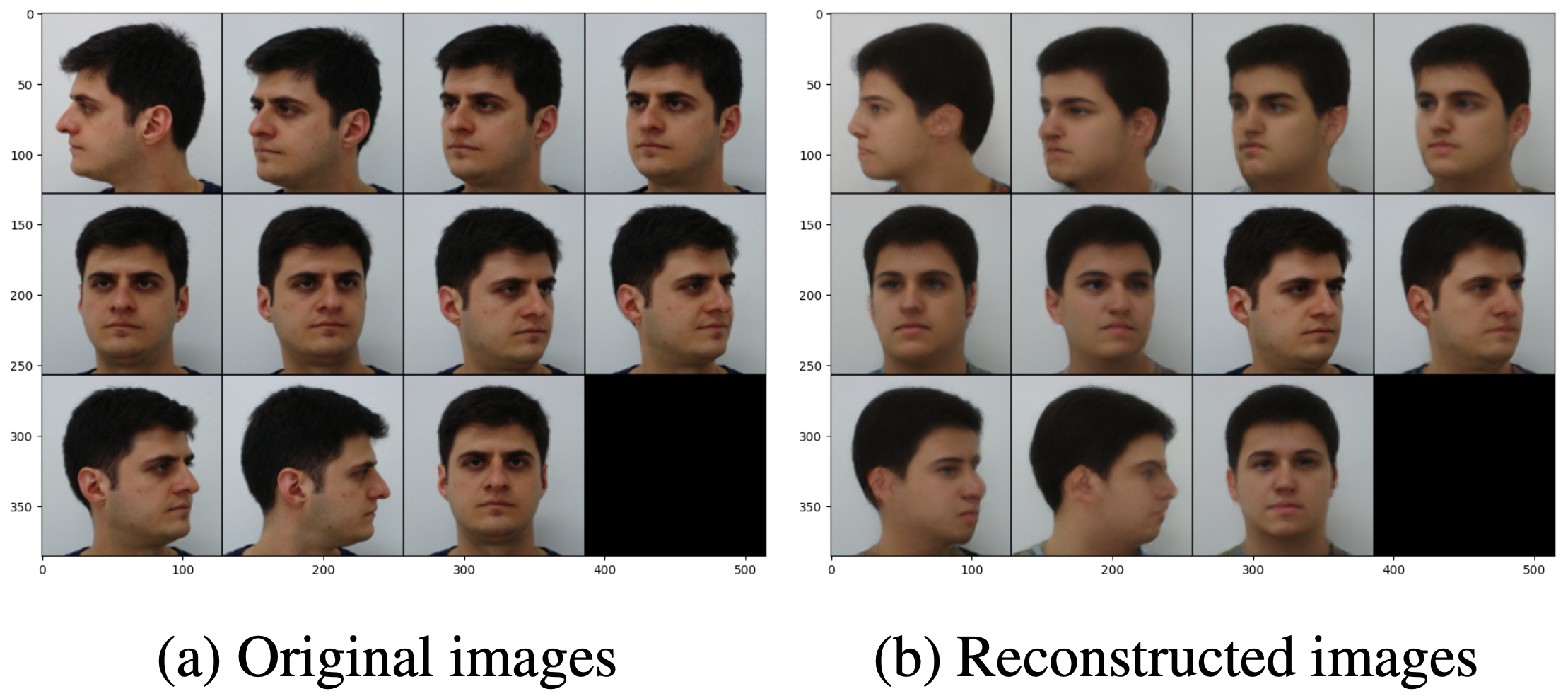

The codebook's ability to compress images into the low-dimensional space and decompress them again sets an upper limit on the quality of the generated images. ViewFormer was not originally trained on facial images, so before fine-tuning the codebook (left), compressing and decompressing an image destroys a lot of its detail. I found that retraining the codebook on the FEI dataset greatly improves the results (right).

Left: Before Training Codebook. Right: After Training Codebook.

The improved codebook was used with the transformer to generate the sequence of images shown below.

Limitations & Future Work

My new model achieves my original aim of generating a face with any pose given some input context images. To improve the results further, future work could:

- Fine-tune the transformer neural network for better facial detail generation

- Add background/shoulder masking for more realistic results

For the moment though, I now have a model to correct the pose of any photo in my library!