OmniParser V2, OmniBox and OmniTool

Overview

For the past few months, I have been working with Microsoft Research on OmniParser V2 - an AI model that takes in a screenshot and predicts the coordinates and descriptions of all elements on it. When used in conjunction with an LLM, this allows AI to use any app (and thereby a computer) purely with vision - as a human would. Compared to OmniParser V1, OmniParser V2 is 60% faster (0.8s/image on an A100) and is more accurate at labelling common apps and icons within apps. As a result, OmniParser V2 achieves near state-of-the-art performance on general computer use benchmarks.

I also developed a Windows 11 virtual machine for testing OmniParser V2; it is 50% smaller than other open-source variants. This VM is called OmniBox because a variety of apps (e.g. Chrome, VS Code, LibreOffice) come pre-installed in this box. As part of the release of OmniParser V2 we are also open-sourcing OmniTool - a Windows computer use demo that combines:

- OmniParser V2

- The latest LLMs: GPT4, o1, o3-mini, R1, Qwen2.5VL and Claude

- OmniBox

You can find OmniTool here. OmniTool removes the need to develop custom code for LLMs to use a variety of apps. Instead, everything sits under one framework. Neat!

Background

This past year, the AI industry has focused on adding tools to LLMs so they can act more intelligently. This is sensible - you wouldn't expect an engineer to do calculations without a calculator or a researcher to stay up to date in a field without the ability to search the internet! However, this has led to the proliferation of one-off tools that interact with apps through code rather than seeing and clicking like a human. Just as programming an autonomous car with one-off functions such as changing lane does not scale, one-off tools for using a computer will not scale either. Instead, we must teach AI to interact with a computer like humans do - with a screen, mouse and keyboard.

There are 2 traditional methods for AI agents to predict coordinates and use a computer:

- Accessibility APIs and DOM trees

- End-to-end VLMs

Accessibility APIs and DOM trees

The first method for computer use relies on calling existing APIs to extract interactable boxes and labels.

Modern browsers and operating systems (e.g. macOS or Windows) provide the ability for a user to hover over UI elements and have their computer speak back what is under their cursor. Under the hood, this feature relies on Accessibility APIs that break down the visible screen area into boxes with labels. If a user's cursor moves into a box, the computer will read back the label. Though this method accurately identifies boxes and labels, not all developers have the bandwidth to tag all UI elements and make their site or app fully accessible. As a result, some sites or apps are unusable with this method.
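To make the "boxes with labels" idea concrete, here is a minimal sketch of the hit test described above. It does not call any real Accessibility API; `LabelledBox` and `label_under_cursor` are hypothetical names, and picking the smallest enclosing box is an assumption about how nested elements might be resolved.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class LabelledBox:
    """A screen region plus the label a screen reader would speak (hypothetical type)."""
    left: int
    top: int
    right: int
    bottom: int
    label: str

    def contains(self, x: int, y: int) -> bool:
        return self.left <= x < self.right and self.top <= y < self.bottom

    def area(self) -> int:
        return (self.right - self.left) * (self.bottom - self.top)


def label_under_cursor(boxes: List[LabelledBox], x: int, y: int) -> Optional[str]:
    """Return the label of the smallest box containing (x, y), or None if no box does."""
    hits = [box for box in boxes if box.contains(x, y)]
    if not hits:
        return None
    # Assumption: the most specific (smallest) element wins when boxes nest.
    return min(hits, key=LabelledBox.area).label


boxes = [
    LabelledBox(0, 0, 800, 40, "Toolbar"),
    LabelledBox(10, 5, 90, 35, "Save button"),
    LabelledBox(100, 5, 180, 35, ""),  # an untagged element: the box exists, the label does not
]
```

The third box illustrates the weakness mentioned above: the element is found, but with an empty label there is nothing useful to read back to a user or pass to an LLM.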

An alternative approach to finding boxes in the browser is to scan the DOM tree for elements that are interactive. This has the benefit of working across all websites, because everything a browser renders comes from the DOM tree. Unfortunately, this limits us to the browser rather than taking advantage of the plethora of OS apps and features.
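As a rough illustration of the DOM-scanning approach, the sketch below walks an HTML string with Python's standard `html.parser` and collects elements that look interactive. The tag list and the `onclick`/`role="button"` checks are assumptions chosen for the example; a real agent would run inside the browser, where it could also read each element's on-screen position.

```python
from html.parser import HTMLParser

# Assumed set of tags treated as interactive for this example.
INTERACTIVE_TAGS = {"a", "button", "input", "select", "textarea"}


class InteractiveElementScanner(HTMLParser):
    """Collects (tag, attributes) pairs for elements that look clickable or typeable."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        looks_interactive = (
            tag in INTERACTIVE_TAGS
            or "onclick" in attr_map
            or attr_map.get("role") == "button"
        )
        if looks_interactive:
            self.found.append((tag, attr_map))


def find_interactive(html: str):
    scanner = InteractiveElementScanner()
    scanner.feed(html)
    scanner.close()
    return scanner.found


page = """
<div>
  <p>Welcome</p>
  <a href="/docs">Docs</a>
  <button id="save">Save</button>
  <div role="button" onclick="go()">Custom</div>
  <input type="text" name="q">
</div>
"""
elements = find_interactive(page)
for tag, attributes in elements:
    print(tag, attributes)
```

Note that nothing in this sketch works for a native desktop app, which is exactly the limitation described above.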

End-to-end VLMs

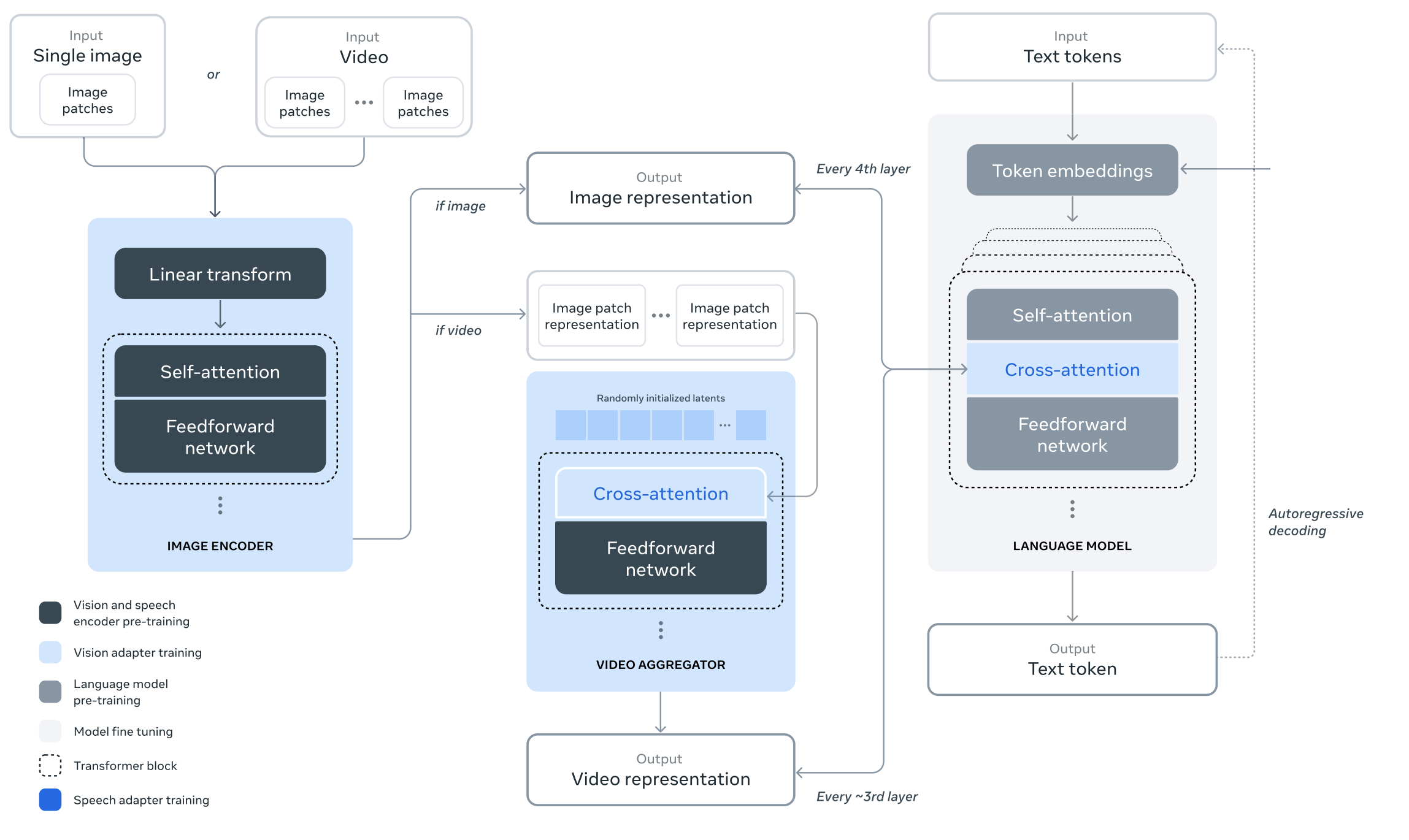

To make LLMs multimodal, a pre-trained visual model (e.g. CLIP for Phi-3.5) is attached to an existing text backbone with some intermediate layers. With enough images and labels, the intermediate neural network layers can be trained to convert the visual encoder output into the token space of the text backbone, as shown in the image above from the Llama 3 paper.

Current vision encoders are often trained to maximise their descriptive ability (e.g. with a contrastive loss) on datasets chosen for the same purpose. Consequently, current VLMs tend to be poor at coordinate prediction, and it is unclear how much data is needed for such a model to become good at it. Additionally, if new apps or icons come out, the question arises of how to update the knowledge of the model - do you have to start training again? Nevertheless, some VLMs do exist with native coordinate prediction, e.g. Claude, Gemini or QwenVL. However, their knowledge of common icons and apps is poor and they often hallucinate random boxes. Larger versions of these models perform better, but still suffer from the same problems and are too costly and slow for everyday use.

OmniParser V2 Release

The release of OmniParser V2 is actually the release of 3 components:

- OmniParser V2

- OmniBox

- OmniTool

OmniParser V2

Like the end-to-end VLMs, OmniParser V2 doesn't rely on platform-specific APIs - it operates directly on the pixels. However, unlike the VLMs, OmniParser V2 is small - it is based on YOLO, OCR and Florence models. This gives us 2 benefits:

- OmniParser V2 works across any app or operating system

- Inference is fast and retraining is cheap as new apps come out

We also draw on the Accessibility API work to collect training data (boxes and labels) for OmniParser V2 from a variety of sources, so that it is effective on both the web and the OS. More details on this will come soon.
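To show how coordinates and descriptions from a screenshot can be consumed downstream, here is a small sketch. The `ParsedElement` schema, with box coordinates normalised to the range 0 to 1, is an assumption made for illustration and is not OmniParser V2's actual output format; `click_point` and `to_prompt` are likewise hypothetical helpers.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ParsedElement:
    # Hypothetical schema, not OmniParser V2's real output format.
    bbox: Tuple[float, float, float, float]  # (x1, y1, x2, y2), normalised to [0, 1]
    description: str


def click_point(element: ParsedElement, width: int, height: int) -> Tuple[int, int]:
    """Convert a normalised box into the pixel at its centre, where a click would land."""
    x1, y1, x2, y2 = element.bbox
    return (round((x1 + x2) / 2 * width), round((y1 + y2) / 2 * height))


def to_prompt(elements: List[ParsedElement]) -> str:
    """Render the elements as numbered text lines that an LLM can refer to by index."""
    return "\n".join(f"[{i}] {el.description}" for i, el in enumerate(elements))


elements = [
    ParsedElement((0.10, 0.20, 0.30, 0.40), "Search box"),
    ParsedElement((0.50, 0.50, 0.75, 0.75), "Submit button"),
]

print(to_prompt(elements))
print(click_point(elements[1], 1920, 1080))
```

With a structure like this, the LLM only has to pick an element index; turning that choice into screen coordinates is plain arithmetic and needs no platform-specific API.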

OmniBox

OmniBox is at its core a Windows 11 VM with a computer control server running in it. Though alternative implementations of this exist, e.g. OSWorld or WindowsAgentArena, OmniBox is 50% the size (20 GB of storage plus a 400 MB Docker image), resulting in faster build times and simpler usage. Potentially, in the future, we could give every agent a computer, just as we do with employees today! Our implementation is based on the Dockur/Windows project with customisations to bring your own ISO.

OmniTool

OmniTool brings together OmniParser V2 and OmniBox to build a demo of controlling your computer through prompting using any VLM! Currently the user flow is as follows:

- Choose your VLM for reasoning, enter your API key and enter your prompt. Hit submit.

- A screenshot from the server in OmniBox is sent to OmniParser V2

- The labels from OmniParser V2 are sent to the VLM, which then chooses an action

- The action is executed and the loop returns to the screenshot step. Once the prompt has been completed, the VLM returns no action and the program exits
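The flow above can be sketched as a simple loop. This is an illustration under stated assumptions, not OmniTool's actual code: `run_agent` and every callable passed to it are hypothetical stand-ins, and the `max_steps` cap is an extra safeguard added for the example.

```python
from typing import Callable, List, Optional

# All names below are hypothetical stand-ins, not OmniTool's real API.
Screenshot = bytes
Action = dict


def run_agent(
    prompt: str,
    take_screenshot: Callable[[], Screenshot],
    parse_screen: Callable[[Screenshot], List[str]],
    choose_action: Callable[[str, List[str], List[Action]], Optional[Action]],
    execute: Callable[[Action], None],
    max_steps: int = 20,  # assumption: a cap so a confused model cannot loop forever
) -> List[Action]:
    """Screenshot -> parse -> choose -> execute, until the VLM returns no action."""
    history: List[Action] = []
    for _ in range(max_steps):
        shot = take_screenshot()                          # screenshot from the VM
        labels = parse_screen(shot)                       # element labels from the parser
        action = choose_action(prompt, labels, history)   # the VLM picks the next action
        if action is None:                                # no action means the task is done
            break
        execute(action)
        history.append(action)
    return history


# Toy stand-ins so the loop can be exercised without a VM or a model.
screen = {"clicked": False}


def fake_screenshot() -> Screenshot:
    return b"pixels"


def fake_parse(shot: Screenshot) -> List[str]:
    return ["[0] Done dialog"] if screen["clicked"] else ["[0] OK button"]


def fake_vlm(prompt: str, labels: List[str], history: List[Action]) -> Optional[Action]:
    return None if screen["clicked"] else {"type": "click", "element": 0}


def fake_execute(action: Action) -> None:
    screen["clicked"] = True


history = run_agent("Press OK", fake_screenshot, fake_parse, fake_vlm, fake_execute)
print(history)
```

Passing the four stages in as callables keeps the loop independent of which VLM or VM is used, which mirrors the "any VLM" framing of the demo.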

We currently support GPT4, o1, o3-mini, R1, Qwen2.5VL and Claude. OmniTool can be found here.

Results

We achieve near state-of-the-art performance on ScreenSpot Pro. For more details, check the OmniParser GitHub page or the Microsoft Research blog.

Risks and Mitigations

To align with the Microsoft AI principles and Responsible AI practices, we conducted risk mitigation by using Responsible AI data when training the icon caption model. This helps the model avoid, as far as possible, inferring sensitive attributes (e.g. race, religion etc.) of individuals who happen to appear in icon images. At the same time, we encourage users to apply OmniParser only to screenshots that do not contain harmful or violent content. For OmniTool, we conducted a threat model analysis using the Microsoft Threat Modeling Tool. Finally, we advise keeping a human in the loop when using OmniTool in order to minimize risk.

Limitations and Future Work

OmniParser V2 is significantly faster than V1 and creates much better labels. Some icons are still mislabelled, though, and future work will investigate ways to reduce this. Additionally, some icons are grouped together by the box identification stage, making labelling difficult.

OmniTool is a fantastic playground for OmniParser V2, OmniBox and the latest VLMs. It highlights the effectiveness of the current models to achieve a wide range of tasks and the power of using any app on the OS. However, it also highlights 2 gaps in existing VLMs:

- Knowledge: if an option is currently hidden, instructions must be specific for OmniTool to find it

- Reasoning over failures: if one method fails, the model keeps retrying it rather than finding another solution

Some of these gaps could be mitigated by extending our demo:

- New models with better computer knowledge

- Reasoning steps

- More keyboard/ mouse actions

For the moment, this release enables computer use outside of the browser with any VLM. It is super fast and has a Windows playground to test it in!